# Function to download and process subtitles

download_and_process_subtitles() {
    local video_url="$1"
    local base_filename="$2"
    local subtitle_file

    # Download subtitles using yt-dlp
    yt-dlp --write-auto-subs --sub-format "vtt" --skip-download -o "${base_filename}.%(lang)s.%(ext)s" "$video_url"

    # Find the first downloaded subtitle file (quoted so names with spaces work)
    subtitle_file=$(ls "${base_filename}".*.vtt 2>/dev/null | head -1)

    # Check if the subtitle file exists
    if [ -f "$subtitle_file" ]; then
        # Drop lines that contain tags, blank lines and timestamp lines, then remove duplicate lines
        perl -ne 'print unless /<.*>/ or /^\s*$/ or /-->/' "$subtitle_file" | awk '!seen[$0]++' > "${base_filename}.txt"
        echo "Processed subtitle saved as ${base_filename}.txt"
    else
        echo "No subtitle file found."
    fi
}

# Variables

VIDEO_URL="video url"

BASE_FILENAME="basename"

# Call the function

download_and_process_subtitles "$VIDEO_URL" "$BASE_FILENAME"

Away From Keyboard


use Obsidian on iOS

[[Obsidian]] works amazingly well on iOS, and writing notes on mobile with it is really satisfying. Combined with iOS Shortcuts and automation, Obsidian streamlines mobile note-taking.

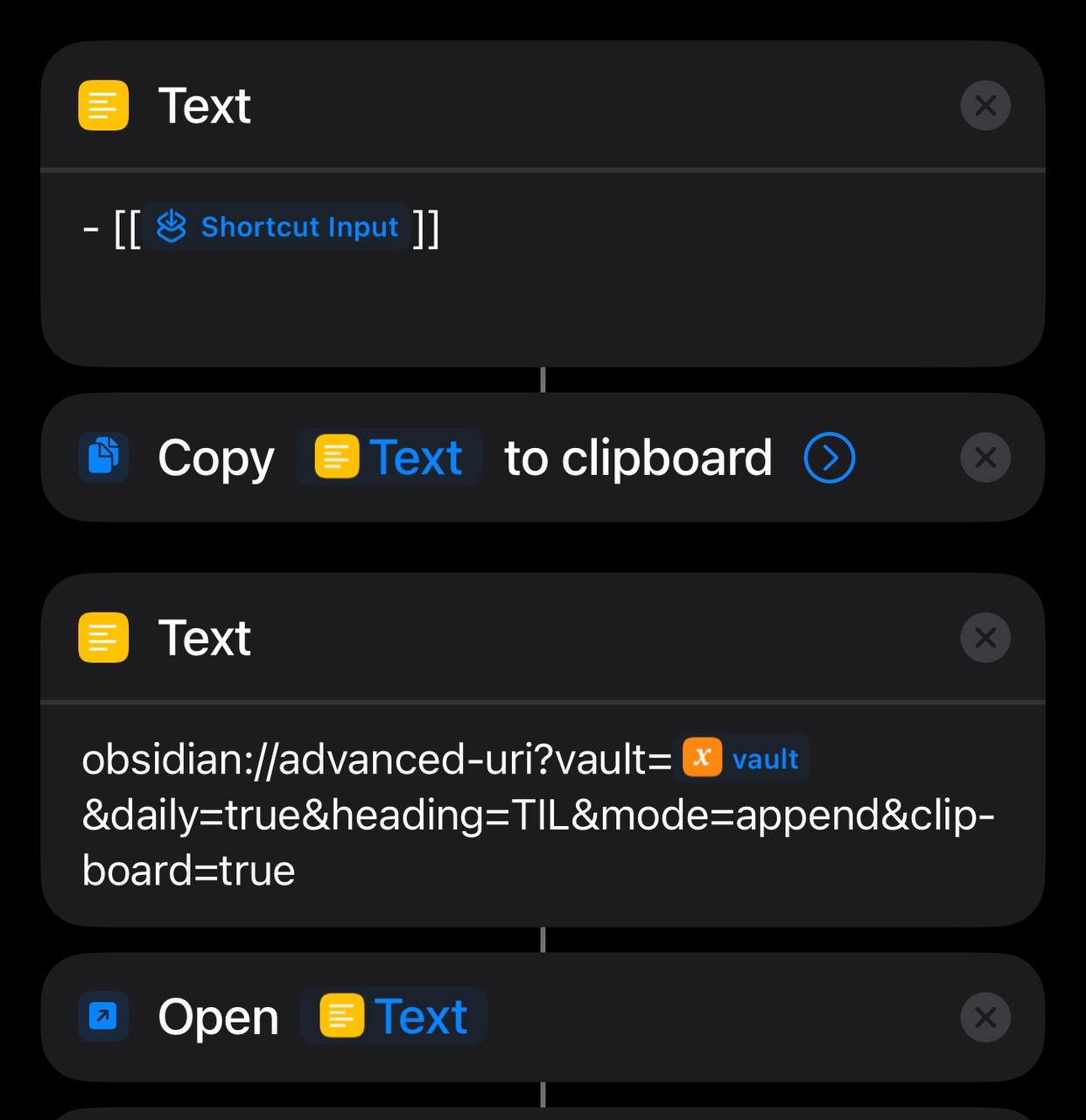

Use [[iOS shortcut]] to automate tasks

Obsidian Advanced URI

This plugin exposes an API to Obsidian via a URL scheme. Combining it with iOS Shortcuts makes complex automation possible, such as creating a note, appending to a note, and reordering a list (via the Sort & Permute lines plugin).

There is a Call Command parameter for calling commands from other plugins, which is the key component for automating with iOS Shortcuts.

example: add to list and reorder list alphabetically

Select some text, open the share menu, and choose the shortcut.

The text is added under the TIL heading of the daily note.

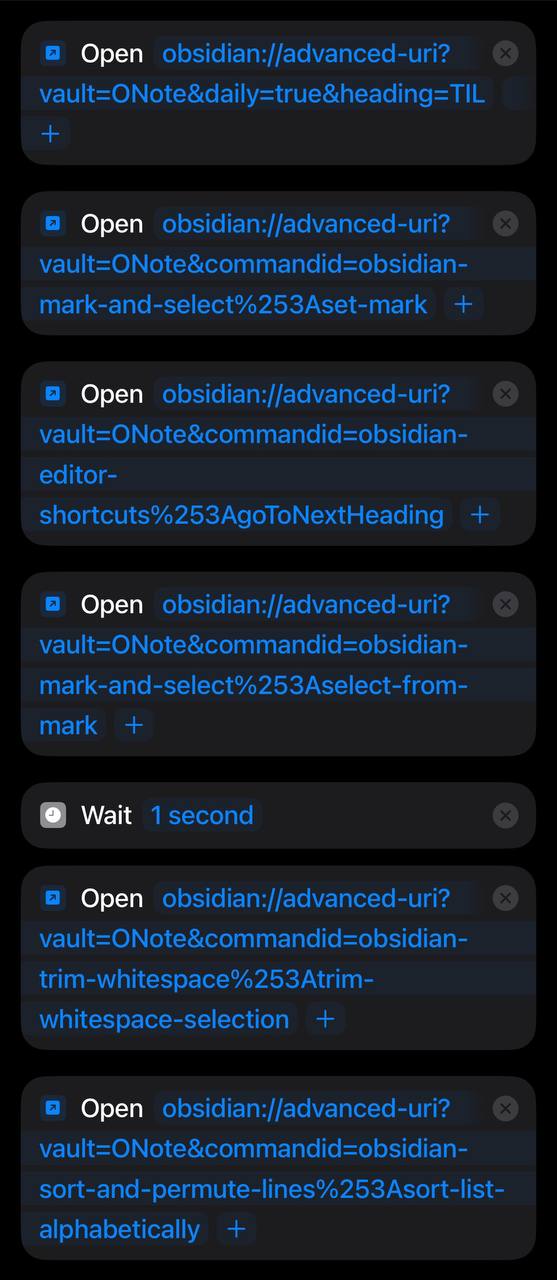

The shortcut uses commands from various plugins to do the following steps:

- open the note and place cursor at specific heading

- set mark, via mark and select

- go to next heading, via Code Editor shortcuts

- go to previous line

- go to end of line

- trim white space, via trim whitespace

- reorder list, via Sort & Permute lines

obtain the command id

The command id can be obtained by running Advanced URI: copy URI for command from the Command Palette.
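As a rough sketch of what such a URI looks like, the snippet below builds an Advanced URI that runs a command by id. It assumes the plugin's obsidian://advanced-uri scheme with vault and commandid parameters; the vault name and command id are placeholders, and urlencode and advanced_uri are hypothetical helper names. Check the plugin documentation for the exact parameters before relying on it.

```sh
# Percent-encode a string for use as a URI query value (ASCII input assumed).
urlencode() {
    s=$1
    out=
    while [ -n "$s" ]; do
        rest=${s#?}          # everything after the first character
        c=${s%"$rest"}       # the first character itself
        case $c in
            [a-zA-Z0-9.~_-]) out=$out$c ;;
            *) out=$out$(printf '%%%02X' "'$c") ;;
        esac
        s=$rest
    done
    printf '%s' "$out"
}

# Build a URI that asks Advanced URI to run a command in a given vault.
# $1 = vault name, $2 = command id (both placeholders in this sketch).
advanced_uri() {
    printf 'obsidian://advanced-uri?vault=%s&commandid=%s\n' \
        "$(urlencode "$1")" "$(urlencode "$2")"
}

advanced_uri "My Vault" "editor:toggle-bold"
# prints: obsidian://advanced-uri?vault=My%20Vault&commandid=editor%3Atoggle-bold
```

In an iOS shortcut, the resulting string would be passed to an Open URLs action; chaining several such URIs is one way to reproduce the step list above.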

Actions URL

Also look at Actions URL: I find it works more reliably, and it can run Dataview queries.

same workflow on macOS

The same shortcut workflow used on iOS can be reused on macOS via Services, which cuts out much of the manual copy-and-paste in the note-taking process.

CSS for multiple fonts

Font Atkinson Hyperlegible

Download the Atkinson Hyperlegible Font | Braille Institute

Use this excellent font for low-vision reading; it is perfect for Obsidian on iPhone!

Custom Font Loader

Enable the Custom Font Loader plugin and turn on multiple fonts.

Custom CSS that uses different fonts for the default text and for code blocks:

:root * {
  --font-default: Atkinson-Hyperlegible-Regular-102a;
  --default-font: Atkinson-Hyperlegible-Regular-102a;
  --font-family-editor: Atkinson-Hyperlegible-Regular-102a;
  --font-monospace-default: Atkinson-Hyperlegible-Regular-102a;
  --font-interface-override: Atkinson-Hyperlegible-Regular-102a;
  --font-text-override: Atkinson-Hyperlegible-Regular-102a;
  --font-monospace-override: Atkinson-Hyperlegible-Regular-102a;
}

.reading * {
  font-size: 20pt !important;
}

/* reading view code */
.markdown-preview-view code {
  font-family: 'Iosevka';
}

/* live preview code */
.cm-s-obsidian .HyperMD-codeblock {
  font-family: 'Iosevka';
}

/* inline code */
.cm-s-obsidian span.cm-inline-code {
  font-family: 'Iosevka';
}

references

How to increase Code block font?! - #2 by ariehen - Custom CSS & Theme Design - Obsidian Forum

How to update your plugins and CSS for live preview - Obsidian Hub - Obsidian Publish

Edward Gibson Discusses Language Processing on Lex Fridman Podcast #426

I learned that surprisingly, the brain areas activated during language processing and coding are not the same, nor do they overlap.

Additionally, human languages tend to minimize word dependency distance, which has provided me with valuable insight into the structure of the German language. In German, there’s a rule that places the verb at the end of a subordinate clause.

Initially, this might seem odd, but it becomes logical from the perspective of minimizing word dependency distance. Consider the sentence:

“Die neuen Richtlinien legen fest, dass die Projekte je nach ihrer ökologischen Bedeutung eingestuft werden könnten.”

In this example, the words in the latter part of the subordinate clause are closely positioned, making the sentence easier to process.

imperfect and perfect in grammatical tenses

The terms “imperfect” and “perfect” in grammatical tenses refer to specific aspects of actions in terms of their time frames and completeness, and these terms can be somewhat confusing because they don’t align with the everyday meanings of “perfect” and “imperfect.”

Imperfect (Präteritum)

1. Meaning in Grammar

In German, the “imperfect” tense, known as Präteritum, is primarily used in written language to describe past events, particularly in narratives. It corresponds to both the simple past and the past progressive in English.

2. Etymology and Usage

The term “imperfect” comes from Latin, where it means “not completed.” It refers to an action that was ongoing or repeated in the past. This meaning carries into English and German grammar but focuses more on the narrative style of recounting past events without specifying whether the action was completed. In German, it is used less frequently in spoken language and more in writing, as it gives a formal, literary quality to the text.

Perfect Tense

1. Meaning in Grammar

The perfect tense in German (Perfekt) is used to describe actions that were completed in the past but are relevant to the present. It is the most common way to convey past events in spoken German.

2. Etymology and Usage

The term “perfect” in grammatical usage is derived from Latin “perfectus,” which means “complete.” The perfect tense is used to indicate that an action in the past has been completed, and its effects or results may still be relevant at the present time. This is why it’s called “perfect” – it signifies that the action is fully complete or “perfected.”

Why These Terms?

- The usage of “perfect” and “imperfect” in grammar does not relate directly to their everyday meanings. In everyday language, “perfect” suggests something flawless or ideal, while “imperfect” suggests something incomplete or flawed. In grammatical terms, however, “perfect” relates to the completion of an action, and “imperfect” to the ongoing nature of an action in the past.

Summary

- Imperfect (Präteritum) is used for describing past actions typically in a narrative, literary context.

- Perfect (Perfekt) is used for indicating actions that were completed in the past but have a connection to the present, commonly used in spoken German.

These terms help to categorize and teach the various ways different languages express time and aspect in verb tenses, even though they might seem counterintuitive based on their names alone.

kündigen and ankündigen


kündigen: to terminate, to quit

ankündigen: to announce

“Ankündigen” is a separable verb and consists of the prefix “an-” and the base verb “kündigen,” which on its own means “to terminate” or “to quit,” usually in the context of employment or contracts. The prefix “an-” changes the meaning of the base verb to imply the start or the announcement of something.

how to understand the connection

Understanding how the prefix “an-” alters the meaning of the base verb “kündigen” in German can indeed seem challenging, especially when the meanings of the prefix and base verb appear quite distinct. Here’s a more intuitive way to comprehend this transformation:

Linguistic Analysis

Base Verb “kündigen”: Derived from “kund” (“known”), it originally meant “to make known”; today it means “to terminate” or “to give notice.” This verb indicates ending something formally, such as a contract or employment.

Prefix “an-”: This prefix in German often signifies the beginning of an action or adds a sense of initiation to the base verb. It can also denote attachment or proximity.

Conceptual Bridge

The key to understanding “ankündigen” lies in bridging the concepts of ‘starting’ and ‘ending’. When you “kündigen” (terminate), you’re essentially bringing something to an end. However, many endings naturally lead to new beginnings. Adding “an-” to “kündigen” shifts the focus from the end of one phase to the initiation of another. In essence, “ankündigen” involves initiating the process of making others aware of a new beginning or change. It’s about declaring the start of something new that follows the end of something old.

Example in Context

Consider a situation where a company decides to terminate a product line (“kündigen”). The announcement of this decision (“ankündigen”) marks the beginning of a new phase for the company, such as the introduction of a new product line or strategy. Here, “ankündigen” does not just communicate the termination but also sets the stage for what is to come next.

Memory Tips

Visualize a Door: Imagine closing one door (“kündigen”) and immediately turning to open another (“ankündigen”). The act of announcing is like opening that new door.

Think of a Sequel: When a film ends, the announcement of a sequel is akin to “ankündigen.” It signals the start of a new narrative following the conclusion of the previous one.

By visualizing the transition from ending to beginning and understanding “an-” as a marker of initiation, “ankündigen” can be seen as a natural and intuitive extension of “kündigen.” This conceptual connection helps grasp the transformation effected by the prefix and aids in memorizing the usage of these verbs in German.

Origin of the word Tschüss

But there is another form of good-bye that is very commonly used, although mostly with good friends. It is very casual. It comes from a long time past when it was fashionable to use the French word when bidding farewell to friends: adieu. In the course of time, and with people from all over the German-speaking world pronouncing and mispronouncing the word, it somehow got an s attached to it. Then it lost its first syllable. And in time it became simply Tschüs (CHUESS).

Learn German in a Hurry

memorize Wetter (weather) vocabulary

Different weather phenomena, along with their grammatical genders:

- die Sonne (the sun) - Feminine

- der Regen (the rain) - Masculine

- der Schnee (the snow) - Masculine

- das Gewitter (the thunderstorm) - Neuter

- die Wolke (the cloud) - Feminine

- der Blitz (the lightning) - Masculine

- der Sturm (the storm) - Masculine

- der Wind (the wind) - Masculine

Mnemonic Device

Feminine (die): Think of Sun and Clouds as nurturing elements in nature, traditionally associated with feminine qualities. Hence, “die Sonne” (sun) and “die Wolke” (cloud) are feminine.

Masculine (der): Rain, Snow, Lightning, Storm, and Wind can be associated with strength and force, often seen as masculine traits. So, “der Regen” (rain), “der Schnee” (snow), “der Blitz” (lightning), “der Sturm” (storm), and “der Wind” (wind) are masculine.

Neuter (das): Thunderstorm is a mix of various elements (rain, lightning, thunder). You can think of it as a complex phenomenon that doesn’t fit into a single category, thus making “das Gewitter” (thunderstorm) neuter.

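The evolution of knowledge management in the AI era: from search engines to LLM question answering to full automation

Over the past few decades, the rapid development of information technology has fundamentally changed how we manage and use knowledge. From the rise of search engines such as Google to the arrival of large language models (LLMs) such as ChatGPT, each technical advance has profoundly shaped the ideas and practice of knowledge management. Now, as AI technology matures further, we are entering a new era of integrated, automated knowledge management.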

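The search engine era: valuing the structure and context of knowledge

Search engines such as Google changed how people look up existing knowledge, and having an encyclopedic memory matters far less than it used to. In the search engine era, the main challenge is finding the knowledge you need in a vast sea of information. Search engines reduce our dependence on memorizing the specific details of each piece of knowledge, because those details can be filled in with a search when needed. This makes us pay more attention to the structure and context of knowledge. Knowledge is no longer a set of isolated facts but an interconnected network. In this era, effective knowledge management means understanding and remembering the connections between pieces of knowledge, and using a search engine to locate and retrieve information quickly.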

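The rise of LLMs: valuing the ability to understand problems, and methodology

Large language models such as ChatGPT have once again changed how we retrieve and manage knowledge. LLMs do more than provide information: they can understand complex queries, take the context of a question into account, and give deeper, more complete answers. I think this will push people to value the ability to ask questions and the methodologies used to understand the essence of a problem.

This requires users not only to understand the nature of the information they are looking for, but also to express their questions clearly and accurately. At this stage, knowledge management becomes more dynamic and interactive; the emphasis is on understanding the core of a problem and on how to ask questions so as to use an LLM effectively to obtain and understand information.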

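AI-assisted knowledge management

Looking ahead, I think the role of AI will shift from simple information processing to active knowledge management. AI-assisted knowledge management will have three characteristics: automated data collection, intelligent information extraction and analysis, and personalized knowledge management.

Automated data processing

LLM technology will be able to automatically collect, classify, and organize data from many channels. This includes structured data (such as database records) and unstructured data (such as social media posts and video content). An LLM can extract key information, generate summaries, do initial processing and classification, and convert the result into a structured data format.

Intelligent information extraction and analysis

LLMs can extract valuable information from the large amounts of data that users collect themselves, and analyze it in depth. This is not limited to numerical data; it also covers understanding text, images, and even speech. In this way, LLMs can help people gain deep insight into their data and discover potential problems and value. ChatGPT's Code Interpreter is one attempt in this direction.

Running LLMs locally and personalized knowledge management

Future knowledge management systems will be able to tailor content to a user's specific needs and preferences. AI will recommend relevant material and information based on the user's behavior, interaction history, and preferences, providing a more personalized experience. Running LLMs locally has already become a trend, which means we need not worry about other people gaining access to this private information.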

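Key abilities for the future: information analysis and integrated judgment

Imagine a future in which everyone has an intelligent assistant to help collect, organize, and analyze knowledge, and can look up any detail at any time through a powerful search engine. In such a technologically mature era, which abilities will become important?

Here is my attempt at an answer:

Information analysis and integrated judgment

In an environment of massive information and data, information analysis and integrated judgment become critical. This is not simply accepting information; it requires analyzing and evaluating it in depth.

This ability includes:

- Information analysis: the ability to understand and analyze information, including digging deep into data and understanding the meaning and potential connections behind it.

- Logical reasoning: reasoning soundly from the information collected.

- Integrated judgment: combining different sources and viewpoints to form a complete, balanced view. This covers not only judging facts but also weighing ethics, values, and practicality.

- Critical thinking: although not the only element, critical thinking remains an important part of this skill. It involves questioning, testing, and reflecting on information.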

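Creativity and the ability to innovate

When basic knowledge management and data analysis are done by AI, human creative thinking becomes an irreplaceable and valuable resource. Innovation involves not only generating new ideas but also turning them into real products, services, or solutions.

Interpersonal and communication skills

In an increasingly technological world, interpersonal and communication skills remain key. These include effective communication, teamwork, emotional intelligence, and leadership. They are essential for building relationships, fostering collaboration, and leading innovative projects.

Key abilities

In the future, basic information processing and data analysis tasks will be handled by AI, but human higher-order thinking, creativity, and interpersonal skills remain irreplaceable. At least so far, transformer-based LLMs have not demonstrated System 2 style thinking. These abilities will be especially important in a future society that depends heavily on data and information.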

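Conclusion

From search engines to LLMs, and on to future AI integration and automation, knowledge management is headed for a profound transformation. Every technical advance requires us to understand and use knowledge in new ways. Future knowledge management will be more automated, intelligent, and personalized, but this also means we need to keep adapting and learning in order to make full use of these new tools.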

Docker daemon ports vs forward container ports


daemon ports

Docker daemon ports: add an address such as tcp://0.0.0.0:9999 to the hosts array in /etc/docker/daemon.json.

It’s about the Docker daemon’s ability to accept commands (like starting/stopping containers, pulling images, etc.) over the network.

forwarding container ports

This is about exposing a specific port of a running container to the host or the outside world, allowing network traffic to reach the service running inside the container.

summary

In summary, setting the Docker daemon to listen on certain TCP ports is about remote management of the Docker engine itself, while forwarding ports for a container is about allowing external access to services running inside that container.
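The two cases can be put side by side in a small sketch. It is a dry run by default: the DOCKER variable, the function names remote_ps and publish_web, the address 192.0.2.10, and the nginx image with ports 8080:80 are all illustrative assumptions. Note that a daemon listening on plain TCP gives anyone who can reach that port full control of the engine, so it should be protected (for example with TLS or a firewall).

```sh
# Dry run by default: commands are printed instead of executed.
# Set DOCKER=docker in the environment to actually run them.
DOCKER=${DOCKER:-echo docker}

# 1) Daemon port: send an API command to a remote Docker engine.
#    Assumes the remote daemon.json contains something like:
#    { "hosts": ["unix:///var/run/docker.sock", "tcp://0.0.0.0:9999"] }
remote_ps() {
    $DOCKER -H "tcp://$1:9999" ps
}

# 2) Container port: publish port 80 of a container on host port 8080,
#    so traffic to the host reaches the service inside the container.
publish_web() {
    $DOCKER run -d -p 8080:80 nginx
}

remote_ps 192.0.2.10
publish_web
```

The first command talks to the engine itself; the second only affects how traffic reaches one service running inside one container.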

Leveraging use-package in Emacs 29.1 and other Emacs Lisp tips

built-in use-package

As a seasoned Emacs user, I’ve been eagerly anticipating the built-in arrival of use-package in version 29.1. And now it’s finally here! This declarative configuration tool has already become my go-to for confining all the chaotic Emacs configurations, making everything more organized and manageable. So, if you haven’t already, I’d wholeheartedly recommend upgrading your Emacs to the latest version 29.1.

Tips for Checking Package Installation

When you’re neck-deep in code, it’s quite common to forget whether you’ve installed a particular package or not. Emacs has got you covered with several functions and macros:

- featurep: Use this to check whether a feature has been loaded; it works when the package’s file ends with a provide form.

- fboundp: This comes in handy when you need to check if a certain function is defined.

- bound-and-true-p: Use this to confirm whether a global minor mode’s variable is both defined and non-nil, that is, the mode is installed and activated.

The Power of cl-letf

I’ve found cl-letf to be incredibly useful when I need to dynamically and temporarily override functions and values defined externally. It’s particularly handy when paired with advice, allowing me to alter the behavior of third-party packages without meddling with their source code.

Here’s a practical example of how to override a function defined in a package. The code modifies the behavior of split-window-horizontally inside create-window so that, no matter what argument it receives, a fixed width is used:

(require 'cl-lib)

(defun my-create-window-advice (orig-fun &rest args)
  "Advice to modify the behavior of `split-window-horizontally' in `create-window'."
  (let ((original-split-window-horizontally (symbol-function 'split-window-horizontally))
        (fixed-width 20))
    ;; Temporarily rebind the function; the binding is undone when `cl-letf' exits.
    (cl-letf (((symbol-function 'split-window-horizontally)
               (lambda (&optional _size)
                 ;; Ignore the requested size and always use the fixed width.
                 (funcall original-split-window-horizontally fixed-width))))
      (apply orig-fun args))))

(advice-add 'create-window :around #'my-create-window-advice)

Embracing thread-first and thread-last Macros

One of my favorite features of Emacs version 25 and onwards is the built-in thread-first and thread-last macros. These can prove immensely useful when dealing with complex data transformations - they help maintain clean and readable code.

In Emacs Lisp, thread-first and thread-last improve the readability of function call sequences. They let you write nested function calls in a more intuitive, linear style, which is especially useful when multiple operations need to be applied in sequence.

https://codelearn.me/2023/05/28/emacs_thread_macros.html

Summary

After a decade of using Emacs, it continues to be an indispensable part of my programming arsenal. Once you have really recognized the extensibility of Emacs, it’s hard not to miss it every time you use another editor.